Conscious, human-like machines are so popular in science fiction that it may come as a surprise to learn that artificial intelligence researchers aren't even sure what it means to ask whether a computer will ever think.

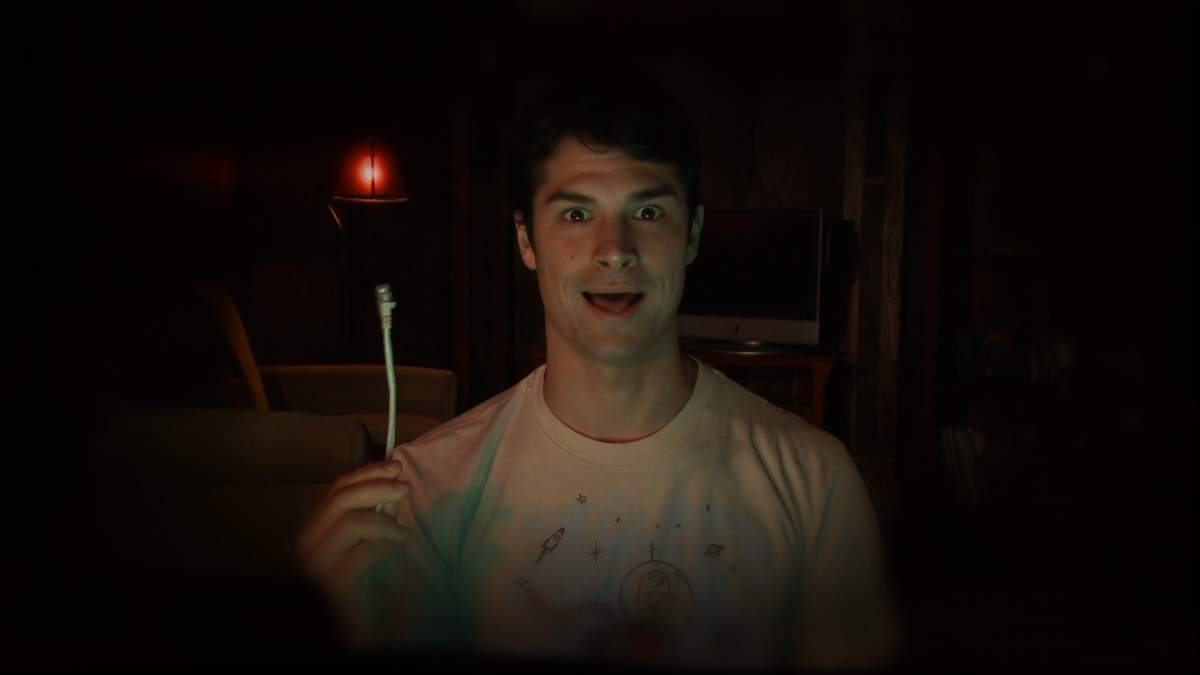

In 1950, British mathematician Alan Turing proposed the following test for determining whether a computer could think. Put the device in a room, he said, connected to the outside world only by a teletype machine. Then have a human experimenter hold a conversation with "whoever" is in the room, using typed messages alone. If the person sending and receiving the messages cannot tell whether a human being or a machine is inside the sealed room, the machine passes its "thinking" exam.

This imaginary scenario is called "The Turing Test."

How can the ability to fool someone into thinking that a machine is a person prove that the machine can think? Well, Turing suggested, isn't that the same criterion we apply to each other every day? You have no direct access to what goes on inside someone else's mind; all you know is how they respond.

With this scenario, Turing was really suggesting that the question "Can machines think?" is much too vague to be useful. Perhaps, he proposed, we should simply ask instead: "Can machines act like humans?" The two questions may amount to the same thing.

Does this make you uncomfortable? If so, you're not alone: many psychologists, philosophers and computer researchers aren't convinced by Turing's argument. Whether he was right or wrong, the Turing Test is a useful thought experiment, because it makes us question many of the things we think we know about thinking.